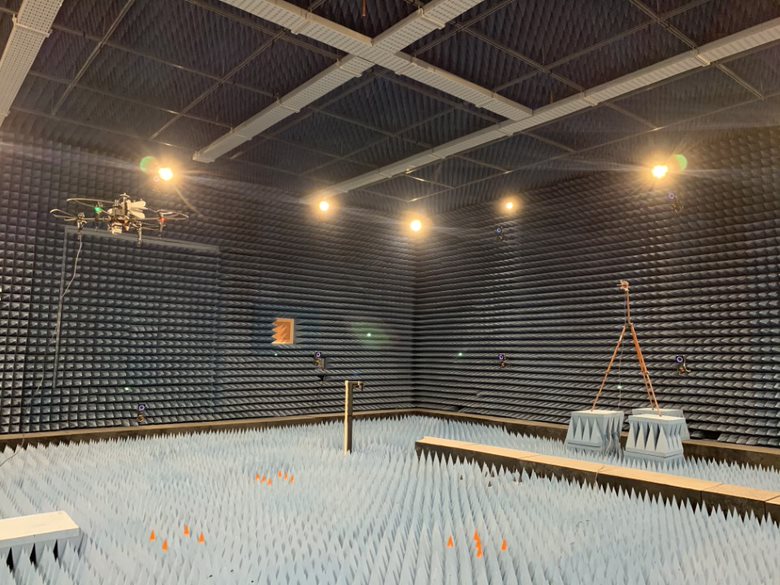

Exploration of Anechoic Chamber Characterization with Autonomous Unmanned Aerial Systems

A. Adhyapak, M. Diddi, M. Kling, A. Colby and H. Singh. 2021 15th European Conference on Antennas and Propagation (EuCAP), Dusseldorf, Germany, 2021, pp. 1-5, doi: 10.23919/EuCAP51087.2021.9411389.

Abstract: A novel system for chamber characterization using a Fourier analysis technique is explored. The system consists of a probe antenna mounted on top of an Unmanned Autonomous Vehicle (UAV), a multi-camera tracking system for precise positioning, a ground control station, a fixed transmit antenna, and a vector network analyzer. The vector measurements are conducted in the ECUAS lab at Northeastern University, where the probe antenna is scanned across a planar region in the quiet zone of the chamber. Multiple control techniques for UAV navigation are analyzed, and their parameters are tuned to optimize the flight trajectory. The Fourier analysis technique is applied to the scan results from the optimum trajectory for both polarizations to yield the reflectivity of the chamber and locate reflection hotspots.
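The abstract does not spell out the processing, but a common way to apply Fourier analysis to a planar probe scan is to transform the sampled field into its plane-wave (angular) spectrum. The direct path then appears as one strong peak and chamber reflections as weaker peaks at other angles. The sketch below is a minimal 1D illustration under stated assumptions, not the authors' implementation: it assumes a uniformly sampled line cut through the quiet zone, a Hann taper, and a synthetic field (a hypothetical 10 GHz direct wave at broadside plus a -26 dB reflection arriving from 30°). The helpers `angular_spectrum` and `strongest_reflection` are hypothetical names.

```python
import numpy as np


def angular_spectrum(field, dx, wavelength, n_fft=4096):
    """Plane-wave (angular) spectrum of a 1D line scan.

    field: complex probe samples taken at uniform spacing dx (metres).
    Returns (angles_deg, level_db) over the visible region |sin(theta)| <= 1,
    normalized so the strongest component is 0 dB.
    """
    window = np.hanning(len(field))  # taper to suppress truncation sidelobes
    spectrum = np.fft.fftshift(np.fft.fft(field * window, n_fft))
    fx = np.fft.fftshift(np.fft.fftfreq(n_fft, d=dx))  # spatial frequency, cycles per metre
    u = fx * wavelength                                # direction cosine sin(theta)
    visible = np.abs(u) <= 1.0                         # drop evanescent components
    mag = np.abs(spectrum[visible])
    level_db = 20 * np.log10(mag / mag.max() + 1e-12)
    return np.degrees(np.arcsin(u[visible])), level_db


def strongest_reflection(angles_deg, level_db, guard_deg=5.0):
    """Strongest component outside a guard band around the direct-path peak."""
    direct = np.argmax(level_db)
    outside = np.abs(angles_deg - angles_deg[direct]) > guard_deg
    idx = np.argmax(np.where(outside, level_db, -np.inf))
    return angles_deg[idx], level_db[idx]


if __name__ == "__main__":
    wavelength = 0.03            # 10 GHz, hypothetical test frequency
    dx = wavelength / 2          # half-wavelength probe spacing (assumed)
    x = np.arange(64) * dx       # 64-point line scan across the quiet zone
    k = 2 * np.pi / wavelength
    # Synthetic field: unit direct wave at broadside plus a 0.05 (-26 dB) reflection at 30 deg
    field = np.exp(1j * k * x * 0.0) + 0.05 * np.exp(1j * k * x * np.sin(np.radians(30)))
    angles, level = angular_spectrum(field, dx, wavelength)
    print(strongest_reflection(angles, level))
```

A measured planar scan would use the 2D transform (`np.fft.fft2`) over both scan axes. The taper choice is a trade-off: a stronger taper widens the peaks but keeps the direct path's sidelobes from masking weak reflections.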

Dynamic Channel Selection in UAVs through Constellations in the Sky

Reus Muns, G., Diddi, M., Singh, H. and Chowdhury, K.R., 2020. Dynamic Channel Selection in UAVs through Constellations in the Sky.

Abstract: Wireless communication between an unmanned aerial vehicle (UAV) and the ground base station (BS) is susceptible to adversarial jamming. In such situations, it is important for the UAV to indicate a new channel to the BS. This paper describes a method of creating spatial codes that map the chosen channel to the motion and location of the UAVs in space, wherein a UAV physically traverses the space from one so-called “constellation point” to another. These points create patterns in the sky, analogous to modulation constellations in classical wireless communications, and are detected at the BS through a millimeter-wave (mmWave) radar sensor. Each constellation point represents a distinct n-bit field mapped to a specific channel, allowing simultaneous frequency switching at both ends without any RF transmissions. The main contributions of this paper are: (i) experimental studies demonstrating how such constellations may be formed using COTS UAVs and mmWave sensors, given realistic sensing errors and hovering vibrations; (ii) a theoretical framework that maps a desired constellation design to error and band-switching time, again considering practical UAV movement limitations; and (iii) an experimental demonstration of jamming-resilient communications, validating system goodput for links formed by UAV-mounted software-defined radios.
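To make the constellation idea concrete, here is a minimal sketch assuming a square grid of 2^n hover points in a vertical plane, a natural-binary mapping from point index to a channel table, and Gaussian error in the radar's position estimate. The grid layout, spacing, channel labels, and the helpers `make_constellation`, `decode_channel`, and `symbol_error_rate` are all hypothetical; the paper's actual constellation designs and error model are not given here. The base station picks the nearest constellation point, and a Monte Carlo run shows how the decision error rate grows as the sensing error approaches half the point spacing.

```python
import numpy as np


def make_constellation(n_bits, spacing_m=1.0):
    """Hypothetical square grid of 2**n_bits hover points (x, z) in metres.

    Point i encodes the n-bit value i, which indexes a channel table.
    """
    if n_bits % 2:
        raise ValueError("this sketch assumes an even number of bits")
    side = 2 ** (n_bits // 2)
    cols, rows = np.meshgrid(np.arange(side), np.arange(side))
    return np.column_stack([cols.ravel(), rows.ravel()]) * spacing_m


def decode_channel(measured_xy, constellation, channels):
    """Map a (noisy) radar position estimate to the nearest point's channel."""
    dist = np.linalg.norm(constellation - np.asarray(measured_xy), axis=1)
    return channels[int(np.argmin(dist))]


def symbol_error_rate(constellation, sigma_m, trials=10000, seed=0):
    """Monte Carlo rate of nearest-point decisions that pick the wrong point,
    assuming i.i.d. Gaussian position error with std sigma_m per axis."""
    rng = np.random.default_rng(seed)
    idx = rng.integers(len(constellation), size=trials)
    noisy = constellation[idx] + rng.normal(0.0, sigma_m, size=(trials, 2))
    d = np.linalg.norm(noisy[:, None, :] - constellation[None, :, :], axis=2)
    return float(np.mean(np.argmin(d, axis=1) != idx))


if __name__ == "__main__":
    points = make_constellation(4, spacing_m=1.0)        # 16 points -> 4-bit field
    channels = [f"ch{i}" for i in range(16)]             # hypothetical channel table
    print(decode_channel((2.1, 0.9), points, channels))  # -> ch6
    for sigma in (0.1, 0.3, 0.5):
        print(sigma, symbol_error_rate(points, sigma))
```

Widening the spacing lowers the error rate but lengthens the flight between points, which is the error versus band-switching-time trade-off the abstract refers to.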

AirBeam: Experimental Demonstration of Distributed Beamforming by a Swarm of UAVs

Mohanti, S., Bocanegra, C., Meyer, J., Secinti, G., Diddi, M., Singh, H. and Chowdhury, K., 2019, November. AirBeam: Experimental Demonstration of Distributed Beamforming by a Swarm of UAVs. In 2019 IEEE 16th International Conference on Mobile Ad Hoc and Sensor Systems (MASS) (pp. 162-170). IEEE.

Abstract: We propose AirBeam, the first complete algorithmic framework and systems implementation of distributed air-to-ground beamforming on a fleet of UAVs. AirBeam synchronizes software-defined radios (SDRs) mounted on each UAV and assigns beamforming weights to ensure high levels of directivity. We show through an exhaustive set of experimental studies on UAVs why this problem is difficult, given the continuous hovering-related fluctuations, the need for timely feedback from the ground receiver within the channel coherence time, and the size, weight, power and cost (SWaP-C) constraints of UAVs. AirBeam addresses these challenges through: (i) a channel state estimation method using Gold sequences, which is used to set suitable beamforming weights; (ii) adaptively starting transmission to synchronize the actions of the distributed radios; and (iii) a channel state feedback process that exploits statistical knowledge of hovering characteristics. Finally, AirBeam provides insights from a systems integration viewpoint, with reconfigurable B210 SDRs mounted on a fleet of DJI M100 UAVs, using GNU Radio running on an embedded computing host.
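The two ingredients named above, Gold-sequence channel estimation and beamforming weights, can be sketched as follows. This is an illustration under assumptions, not AirBeam's implementation: it builds length-31 Gold codes from the preferred polynomial pair x^5 + x^2 + 1 and x^5 + x^4 + x^3 + x^2 + 1, gives each UAV a different code shift (hypothetical choice), estimates each UAV's complex channel gain by correlating a noiseless received superposition with that UAV's code, and then applies phase-conjugate (co-phasing) weights so all contributions add in phase at the ground receiver. The helper names are hypothetical, and timing synchronization, noise, and feedback delay are ignored.

```python
import numpy as np


def m_sequence(degree, taps):
    """One period of a binary m-sequence.

    Uses the recurrence a[n + degree] = XOR of a[n + e] for e in taps, where
    taps are the lower exponents (including 0) of a primitive polynomial.
    """
    period = 2 ** degree - 1
    a = [0] * (degree - 1) + [1]  # any nonzero seed works
    while len(a) < period:
        n = len(a) - degree
        a.append(sum(a[n + e] for e in taps) % 2)
    return np.array(a)


def gold_code(shift, degree=5, taps1=(2, 0), taps2=(4, 3, 2, 0)):
    """Gold code (as +/-1 chips) from x^5+x^2+1 and x^5+x^4+x^3+x^2+1."""
    bits = m_sequence(degree, taps1) ^ np.roll(m_sequence(degree, taps2), shift)
    return 1 - 2 * bits  # map bit 0 -> +1, bit 1 -> -1


def estimate_channels(rx, codes):
    """Correlate the received chips with each UAV's code to estimate its complex gain."""
    return np.array([np.dot(rx, c) / len(c) for c in codes])


def cophasing_weights(h_est):
    """Unit-magnitude weights that cancel each UAV's channel phase at the receiver."""
    return np.conj(h_est) / np.abs(h_est)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    codes = [gold_code(s) for s in (0, 7, 13)]        # one code per UAV (hypothetical shifts)
    h = rng.normal(size=3) + 1j * rng.normal(size=3)  # unknown air-to-ground gains
    rx = sum(hi * c for hi, c in zip(h, codes))       # noiseless superposition at the receiver
    w = cophasing_weights(estimate_channels(rx, codes))
    print(abs(np.sum(h * w)), np.sum(np.abs(h)))      # achieved vs. ideal coherent amplitude
```

Distinct codes from this family have a zero-lag cross-correlation of -1 over 31 chips, so each estimate is biased by only about 1/31 of the other UAVs' summed gains, and the co-phased sum comes close to the ideal coherent amplitude.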

Experimental Imaging Results of a UAV-mounted Downward-Looking mm-wave Radar

Zhang, W., Heredia-Juesas, J., Diddi, M., Tirado, L., Singh, H. and Martinez-Lorenzo, J.A., 2019, July. Experimental Imaging Results of a UAV-mounted Downward-Looking mm-wave Radar. In 2019 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting (pp. 1639-1640). IEEE.

Abstract: Optical and thermal sensors mounted on Unmanned Aerial Vehicles (UAVs) have been successfully used to image difficult-to-access regions. Nevertheless, none of these sensors provides range information about the scene; therefore, their fusion with high-resolution mm-wave radars has the potential to improve the performance of the imaging system. This paper presents our preliminary experimental results of a downward-looking UAV system equipped with a passive optical video camera and an active Multiple-Input-Multiple-Output (MIMO) mm-wave radar sensor. The 3D imaging of the mm-wave radar is enabled by collecting data along the line of motion, thus producing a synthetic aperture, and by using a co-linear MIMO array perpendicular to the motion trajectory. Our preliminary results show that the fused optical and mm-wave image provides shape and range information, which ultimately results in an enhanced imaging capability of the UAV system.
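The aperture described above, a co-linear MIMO array across track combined with UAV motion along track, can be sketched with the usual phase-centre approximation, in which each transmitter/receiver pair behaves like a single monostatic element midway between the two antennas. The array geometry (2 transmitters, 4 receivers), the ~77 GHz wavelength, the flight-line sampling, and the helper names are hypothetical, not parameters from the paper. The resolution helper uses the standard approximation λR/(2L) for a monostatic-equivalent aperture of length L.

```python
import numpy as np


def virtual_array(tx_x, rx_x):
    """Phase-centre approximation: each Tx/Rx pair acts like one monostatic
    element located midway between the two antennas (cross-track positions)."""
    tx = np.asarray(tx_x)[:, None]
    rx = np.asarray(rx_x)[None, :]
    return np.sort(((tx + rx) / 2).ravel())


def aperture_grid(virtual_x, along_track_y):
    """2D sampling grid: cross-track virtual elements x UAV positions along the flight line."""
    xx, yy = np.meshgrid(virtual_x, along_track_y, indexing="ij")
    return np.column_stack([xx.ravel(), yy.ravel()])


def cross_range_resolution(wavelength, range_m, aperture_m):
    """Approximate resolution lambda * R / (2 L) of a monostatic-equivalent aperture."""
    return wavelength * range_m / (2 * aperture_m)


if __name__ == "__main__":
    wavelength = 3.9e-3                  # ~77 GHz, hypothetical
    d = wavelength / 2                   # hypothetical receiver spacing
    tx = [0.0, 4 * d]                    # 2 transmitters
    rx = [0.0, d, 2 * d, 3 * d]          # 4 receivers
    vx = virtual_array(tx, rx)           # 8 virtual elements, uniform at d/2
    ys = np.linspace(0.0, 1.0, 101)      # UAV positions every 1 cm over a 1 m flight line
    grid = aperture_grid(vx, ys)
    print(len(vx), grid.shape)
    print(cross_range_resolution(wavelength, 10.0, 1.0))  # along-track, at 10 m range
```

With this layout the 8 virtual elements span only a few millimetres, so the cross-track resolution is much coarser than the along-track resolution set by the flight line; a wider Tx/Rx layout or more elements would be needed to balance the two.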